I’ve been wanting to write a post about some of the research I’ve been working on but I realized that my work has gotten really technical and not very accessible to someone not in machine learning. So I thought as a precursor I would write about spectral clustering at a very high level and with lots of pictures. I hope you can follow along. For a more technical introduction to spectral clustering, this tutorial is very nice and much more complete than this article.

Clustering

To start out, it is important to understand what the clustering problem is and why it is important. Clustering is an extremely useful tool in data analysis whereby a set of objects are organized into groups based on their similarity. We typically want to form groups so that objects in the same group are highly similar and objects in different groups are less similar. This is typically known as unsupervised learning because the data set is not labeled (differentiating it from other tasks like regression and classification).

Many applications of clustering are more exploratory, in the sense that further analysis is done individually on the clusters discovered by the algorithm. Applications include image processing, bioinformatics, information retrieval and many, many others. In image processing, one application might be to identify all of the different objects in the image by clustering the pixels. Once pixels have been clustered, it becomes much easier to tag or label the objects. There are several applications in bioinformatics, but one example is identifying groups of genes that have similar expression patterns across conditions. Identifying groups of similar genes facilitates subsequent analysis, in part because these groups are much smaller than the original set. Another application is in networking, where grouping computers on a network based on packet travel times can be used as a preprocessing step to several network optimizations. I won’t delve too much into applications but I hope that you are convinced that this is an interesting and very important problem in data analysis.

Mathematically, the clustering problem can be thought of as follows: we are given a dataset of points (objects) $$x_1, ldots, x_n$$, and a number $$k$$, and would like to partition the dataset into $$k$$ groups (clusters) so that within cluster similarity is high and between cluster similarity is low. In some cases the points lie in some Euclidean (or metric) space so we can use distances as replacement for similarity, but we want within cluster distance to be low and between cluster distance to be high. Getting from a specific application to this mathematical formulation is sometimes easy but sometimes less so. In the gene expression example, we can represent each gene as a vector of its expression in each of the conditions. Thus it is natural to think of the genes as living in some Euclidean space. In the networking example, it is more natural to consider a computer only in terms of its distances to other computers. Here there is no Euclidean structure but we are still able to use a number of clustering examples.

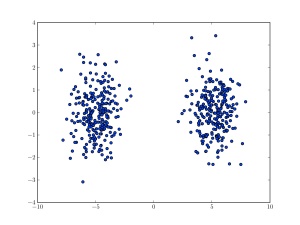

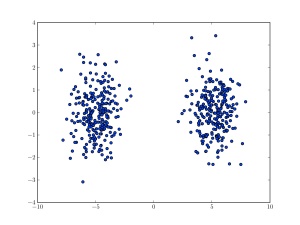

As an example of the more abstract scenario, consider the dataset below, consisting of some points in the plane drawn from two two-dimensional gaussian distributions. One can visually see that the clustering in the second figure is a good one, and this matches our intuition that the points from one normal should be in one cluster while the points from the other normal should be in a different cluster.

I won’t say much more about clustering in general, except that typically this is framed as a minimization problem for some cost function. For some examples and some more discussion about this, please see this wikipedia article.

Spectral Clustering

Spectral Clustering is a family of clustering algorithms that have foundations in graph theory and linear algebra. I won’t dive too much into these foundations in this article, but rather I hope to give some intuition as to why this kind of algorithm makes any sense.

First, what is the algorithm. I’ll explain one simple variant here. Given our data points $$x_1, ldots, x_n$$, we construct a graph on the $$n$$ objects where two objects are connected by an edge if they are sufficiently similar. Going with our example from above (different set of data, but two clusters from two-dimensional normal distributions), if we were to add an edge between every set of objects $$x_i, x_j$$ whose distance is less than 1.5, we obtain the following graph:

With every graph of this form, we can construct an $$n times n$$ matrix $$M$$, called the adjacency matrix, where $$M_{ij} = 1$$ if there is an edge between $$x_i$$ and $$x_j$$ and $$M_{ij}= 0$$ otherwise (for simplicity set $$M_{ii} = 1$$ for all $$i$$). In spectral clustering, we look at the eigenvalues and eigenvectors of this matrix (or a related matrix called the Laplacian) and use these quantities for clustering.

Why would the eigenvectors and eigenvalues of $$M$$ tell us anything meaningful about the clustering of the data? Consider a very simple example, where we have two clusters and when we construct the graph, we put edges between every pair of objects in the same cluster, and put no edges across clusters. If this is the case then the adjacency matrix $$M$$ of the graph is block diagonal and looks like (suppose all of the objects in the first cluster come before the objects in the second cluster and suppose we only have 4 objects in this toy example):

$$! left(begin{aligned} &1 1 0 0 \ &1 1 0 0 \ &0 0 1 1 \ &0 0 1 1end{aligned}right)$$

And it is easy to see that the eigenvectors of this matrix are $$(1 1 0 0)^T$$ and $$(0 0 1 1)^T$$. If we took the first one of these, the coordinates for which the eigenvector is $$1$$ correspond exactly to the items in the first cluster (the same is true of the second one identifying the second cluster). If I were to permute the matrix by swapping rows and columns, to something like:

$$! left(begin{aligned} &1 0 1 0 \ &0 1 0 1 \ &1 0 1 0 \ &0 1 0 1end{aligned}right)$$

Then the eigenvectors are $$(1 0 1 0)^T$$ and $$(0 1 0 1)^T$$, which again exactly identify the clustering (here the first and third objects are in one cluster and the second and fourth are in the other). This is the simple justification of why Spectral Clustering makes any sense; the eigenvectors of these matrices make it really easy to identify the clusters.

I just argued that spectral clustering would work for a very contrived example and I hope that you are feeling dissatisfied by this. Some questions you might have are: What happens if not every pair of objects from the same cluster shares an edge (as in the figure above)? What happens if there are edges between the two clusters? What happens if we are looking for more than two clusters?

It turns out that it isn’t a big deal if the clusters do not form completely connected subgraphs. In fact, it is sufficient that each cluster is connected (there is a path between every pair of nodes in the cluster, not necessarily an edge), and disconnected from the other clusters for the eigenvectors to exactly identify the clusters. However, to make this claim, we have to use the Laplacian matrix rather than the adjacency matrix. A proof of this claim is in the tutorial linked to at the top of this article and I don’t really want to go into the details but please see that if you are interested. With this result, you should be convinced that spectral clustering on the graph in the third figure will recover the clustering in the second figure (which as we said is the correct clustering).

If there are between cluster edges, then in general it’s not obvious that spectral clustering (or any clustering algorithm for that matter) will work. However, as long as there are very few between cluster edges (in comparison to the number of within cluster edges) then there is reasonable high-level justification for the algorithm’s performance. Each spectral clustering algorithm has connections to a graph cut problem. All of these graph cut problems look for a partitioning of the graph that minimizes the number of edges that have to be removed in the partitioning (usually also with some restrictions on the sizes of the partition subsets). It is well known that spectral algorithms all go after these graph cut objectives (specifically they are relaxations of those problems, which are hard to solve). If you believe this argument then you should at least be convinced that if the smallest cut is easy to identify (i.e. the number of between cluster edges is very small) then spectral clustering should be successful.

Extending spectral algorithms to handle more than two clusters is actually somewhat challenging. The most popular strategy is to use the first $$k$$ eigenvectors (corresponding to the largest eigenvalues), represent each object as a $$k$$-dimensional vector using the eigenvectors, and run a different clustering algorithm in the $$k$$-dimensional space. If the eigenvectors are $$v_1, ldots v_k$$ each in $$mathbb{R}^n$$ then we would represent the object $$x_i$$ as $$(v_1(i), ldots, v_k(i))^T$$. The high level justification (again we can appeal to the contrived case) is that the eigenvectors separate the cluster very nicely, and representing each object in terms of the eigenvectors makes it very easy for other clustering algorithms to succeed. As an example, consider the block diagonal case again. In this case, all objects from the same cluster get mapped to the exact same point (and objects from different clusters get mapped to different points) so that virtually any clustering algorithm will work.

Conclusion

If you made it this far, I hope that you have some basic understanding of what the clustering problem is and why looking at the eigenvectors of a graph adjacency matrix is a reasonable thing to do for the clustering problem. I only gave a really simple example to convince you that this algorithm makes sense, and really I didn’t even tell you what the algorithm is in detail, but maybe I gave you some insight as to why these things might make sense. Without getting into lots of linear algebra, it’s quite hard to explain what is really going on with spectral clustering, but if you are prepared for that challenge I highly encourage you to read the tutorial I mentioned earlier.

My primary motivation for writing this is so that I can explain some of the things that I have been doing for the last year or so. The main idea of our work is to prove that spectral clustering will perform well on a large family of “clusterable” graphs. These graphs have identifiable clusters, but are corrupted with a lot of noise, which makes it challenging to recover the clusters. Our results show that the eigenvectors are stable under noise, so that spectral clustering still performs well while other algorithms may not. More on this in a later post.